Dan Brown: Understanding the gaps between academia and policymaking

Academic studies don’t always estimate the parameters that will be most useful to us as we try to understand the cost-effectiveness of charities’ interventions. Even when they do, it may be difficult to figure out how those estimates apply to a specific charity’s program. Dan Brown, a senior fellow at GiveWell, uses examples from his own projects to demonstrate the depth of this challenge — and suggests ways to get practical value from academic insights.

Below is a transcript of Dan’s talk, which we’ve lightly edited for clarity. You can also watch it on YouTube and discuss it on the EA Forum.

The Talk

Thanks, Nathan, for the introduction. As I mentioned, I'm from GiveWell. We're a nonprofit organization that searches for the most cost-effective giving opportunities in global health and development. We direct approximately $150 million per year to our recommended top charities, and we publish all of our research online, free of charge, so that any potential donor can take a look for themselves.

In order to evaluate the cost-effectiveness of a charity’s intervention, we need to extrapolate estimates of the effect of that intervention from academic studies that were conducted in a different context. In this presentation, I'm going to discuss some of the challenges that arise when we try to do that. This is known as the problem of external validity or generalizability.

I'm by no means the first person to talk about this problem (not even the first at this conference), but before I started working at GiveWell about 18 months ago, I don't think I really appreciated what it looks like to try to tackle it in practice, or quite how difficult it can be. So over the next 20 minutes, I'm going to run through two case studies from projects that I've worked on and [share] the kinds of questions that come up when we're trying to understand external validity in practice. In doing so, hopefully I'll also give you a sense for what some of the day-to-day work looks like on the research team at GiveWell.

Case Study 1: GiveDirectly

One of our recommended top charities, GiveDirectly, provides unconditional cash transfers to households in Kenya, Uganda, and Rwanda. And one of the potential concerns with these cash transfers is they may cause a negative spillover effect on the consumption levels of individuals who don't receive the transfers themselves. So we investigated this possibility.

For the purposes of this presentation, I'm going to focus on across-village spillover effects only. In other words, do people experience a decrease in their consumption in real terms because the village next to them received cash transfers? And I'm going to focus on one of the main mechanisms for these across-village spillover effects on consumption: a change in prices.

Following a cash transfer, there will be an increase in demand and prices for goods in the treated villages, and that creates an arbitrage opportunity. An individual could purchase a good in an untreated village, transport it to a treated village, sell it at a higher price, and make a profit. In doing so, they're going to increase demand for the good in the untreated village, and so bid the price up there as well. And that's going to make households in the untreated villages worse off.

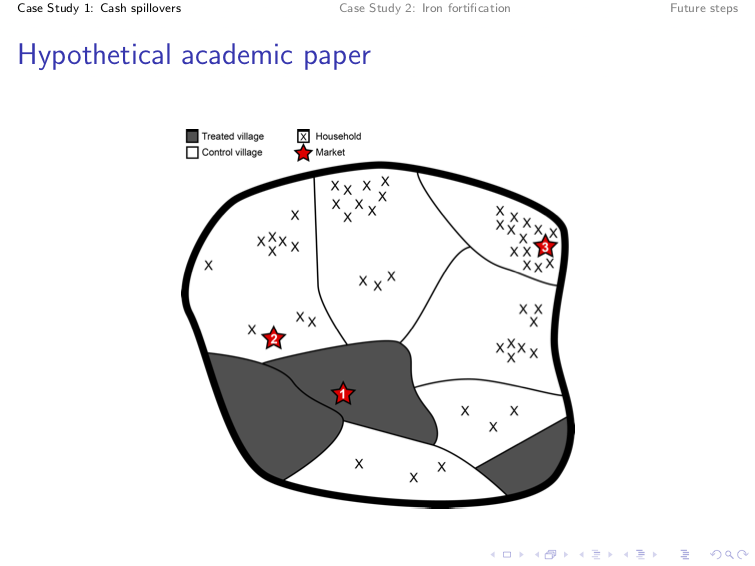

A bit of context: Some academic studies have tried to estimate these spillover effects, often using something that's known as a randomized saturation design. And that works broadly as follows:

Villages are organized into clusters, and for illustration, I've drawn two clusters on this figure [see above slide]. In some clusters, one-third of villages are treated. These are known as low-saturation clusters, like the one on the left, and in other clusters, two-thirds of villages are treated. They are known as high-saturation clusters, like the one on the right. Which clusters are assigned to low- and high-saturation status is determined randomly. Then, within each cluster, which villages are assigned to the treatment group and the control group is also determined randomly. The treated villages here are the villages that are shaded in gray.

Using this design, we can take the consumption of a household in an untreated village in a high-saturation cluster and compare it to the consumption of a household in an untreated village in a low-saturation cluster. The difference in their consumption is going to [reveal] the effect of being surrounded by an extra one-third of treated villages. And within this design, we can also estimate spillover effects at different distances.
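As a rough illustration of the comparison just described, here is a minimal Python sketch. The household records and the function name `saturation_spillover` are hypothetical, made up for this example. The sketch only shows the difference in means between untreated households in high- and low-saturation clusters, not the estimation procedure of any particular study.

```python
from statistics import mean

# Hypothetical records for households in UNTREATED villages only.
# Each tuple: (saturation status of the household's cluster, consumption in arbitrary units).
untreated_households = [
    ("low", 100.0),
    ("low", 104.0),
    ("low", 96.0),
    ("high", 97.0),
    ("high", 95.0),
    ("high", 99.0),
]


def saturation_spillover(records):
    """Mean consumption in high-saturation clusters minus mean in low-saturation clusters.

    Because saturation status is assigned randomly across clusters, this
    difference estimates the effect on an untreated household of having an
    extra one-third of the villages in its cluster treated.
    """
    high = [consumption for status, consumption in records if status == "high"]
    low = [consumption for status, consumption in records if status == "low"]
    return mean(high) - mean(low)


print(saturation_spillover(untreated_households))  # -3.0 with the made-up data above
```

A real analysis would also account for clustering when computing standard errors and could repeat the comparison within distance bands.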

The question, then, is how do we take an estimate of a spillover effect from an academic study like this and apply it to a specific charity’s program, like GiveDirectly’s, in practice and in some other context? I'm going to discuss two difficulties that come up when we try to do this.

Let's take a hypothetical academic study. Suppose we've been able to estimate the effects of being surrounded by an extra one-third of treated villages or having an extra one-third of treated villages in your region. As before, the treated villages are shaded in gray and the black Xs indicate the households that live in the untreated villages. The red stars indicate the three marketplaces that exist in this region. So following a cash transfer, there'll be an increase in prices in the market in the treated village, which [in the slide above] is labeled as “Market 1.”

The extent to which prices arbitrage across markets depends on how easy it is to transport goods between them. Market 2 is very close to Market 1; it's very easy to transport goods there, and so prices are going to increase in Market 2, and the households that are served by Market 2 are going to experience a decrease in their consumption in real terms. But Market 3 is much farther away from Market 1. It's much harder to transport goods to Market 3, and so accordingly, prices aren't going to increase much there, and the households served by that market won’t be affected much by the cash transfers in the treated villages. So in this academic study, we might expect to see a fairly limited spillover effect on the consumption of households in the untreated villages.

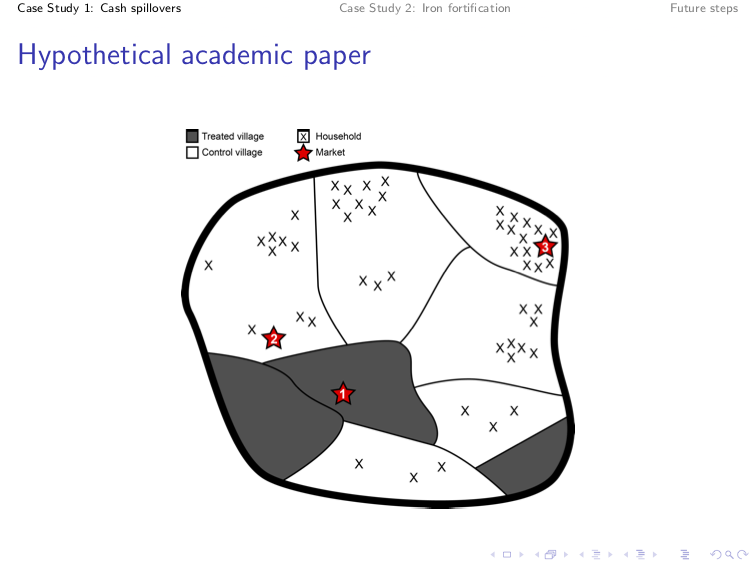

But let's suppose that our charity operates in a different setting, specifically one where markets are more economically integrated. Suppose there's now a train line that links Market 1 to Market 3 in the charity setting. It's now much easier to transport goods from Market 1 to Market 3, and so prices will increase accordingly in Market 3, and the households that are served by it are now going to experience a decrease in their consumption in real terms. So in this charity setting, we'd expect to see a considerably larger spillover effect on the consumption of households in the untreated villages than in the academic study setting.

This is obviously a very simplified example, but the point is that if we want to extrapolate a spillover effect from an academic study to a specific charity’s program, we need to take into account the degree to which markets are economically integrated. And if we fail to do that, we're going to arrive at inaccurate predictions about the size of spillover effects.

Okay, so how do we deal with this in practice? Unfortunately, amongst the academic studies that we've reviewed, we've not seen empirical evidence that tells us how much the decline of spillover effects with distance depends on the degree to which markets are economically integrated. So ultimately, we're probably going to need further academic study of this.

For the time being, we have placed much greater weight on studies that were conducted in contexts that are more like GiveDirectly's in terms of how integrated markets are. For example, we place very little weight on a study that was conducted in the Philippines, because that study covered markets across multiple islands, and we suspect that markets across islands are probably a lot less economically integrated than markets in GiveDirectly's setting.

Let's go back to the original hypothetical study setting to discuss a second challenge that comes up. Let's assume that prices arbitrage as they did originally. Prices increase in Market 2 following the cash transfer, but they don't increase much in Market 3.

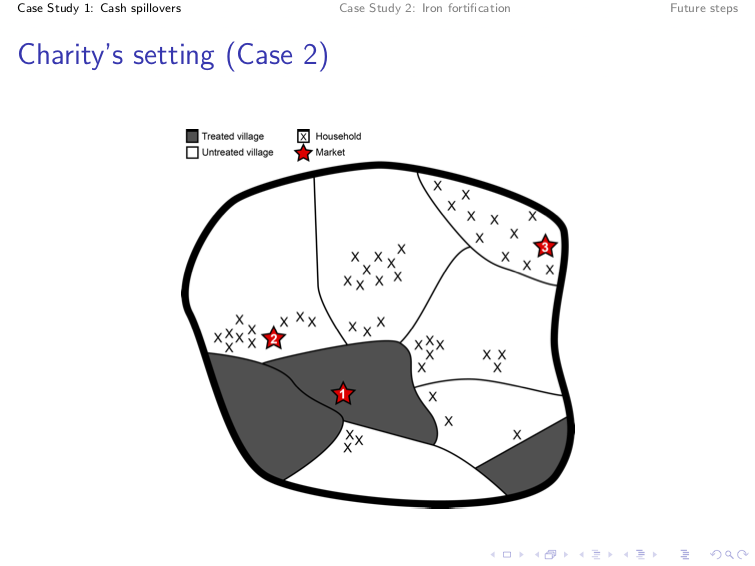

Now let's move to another charity setting, where the spatial distribution of households in our untreated villages is very different. In this figure, we can see that a much higher proportion of the households in the untreated villages live close to the treated villages, and are served by Markets 1 and 2, where we know that prices have increased. So again, in this charity setting, we'd probably expect to see considerably larger spillover effects on the consumption of households in the untreated villages than in the academic study setting.

So if we want to extrapolate an estimate from the academic study to this specific charity’s program, we need to take into account the differences in the spatial distribution of households between these two different contexts. And a failure to do that, again, is going to lead us to quite inaccurate predictions about the size of spillover effects.

At an even more basic level, we just need to know the location of the households in the untreated villages. We need to know how many households are affected by spillover effects, because ultimately we want to aggregate a total spillover effect across all untreated households.
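The aggregation described here can be sketched in a few lines of Python. Everything in the block is a hypothetical placeholder: the distance bands, the per-household effects, the household counts, and the function name `total_spillover`. It is meant only to show why the same per-distance effects can add up to very different totals when households are distributed differently.

```python
def total_spillover(effect_by_band, households_by_band):
    """Sum (per-household spillover effect x number of untreated households) over distance bands."""
    return sum(
        effect_by_band[band] * households_by_band[band] for band in effect_by_band
    )


# Hypothetical per-household change in consumption, by distance from a treated village.
effect_by_band = {"0-2 km": -4.0, "2-5 km": -1.0, "5+ km": 0.0}

# Hypothetical counts of untreated households in each band.
study_households = {"0-2 km": 100, "2-5 km": 300, "5+ km": 600}
charity_households = {"0-2 km": 500, "2-5 km": 400, "5+ km": 100}

print(total_spillover(effect_by_band, study_households))    # -700.0
print(total_spillover(effect_by_band, charity_households))  # -2400.0
```

Both settings contain 1,000 untreated households in this made-up example, yet the total differs by more than a factor of three because more households in the charity setting live close to treated villages. Without household locations, the counts in each band cannot be filled in.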

One difficulty that arises here is that even though the academic studies do collect information on the location of households in untreated villages, unfortunately this isn't something that GiveDirectly collects. This is fairly understandable. We wouldn't expect a charity to spend a lot of additional resources to collect information in areas where it doesn't even implement its program. But it does make it very difficult for us to extrapolate these academic study estimates to predict the effect of GiveDirectly's program.

We can't do a lot to deal with this at the moment. In the future, we’ll try to find some low-cost ways of collecting information on the location of these households in the surrounding villages. But hopefully, the bigger point that you take from this first case study is that if we want to extrapolate the effect of an academic study to a specific charity’s program, we need to think carefully about the mechanism that's driving the effect — and then we need to think about contextual factors in a specific charity setting that might affect the way that mechanism operates. And often we go and find additional data or information from other sources in order to make some adjustments to our model.

Case Study 2: Fortify Health

Another example of this comes from a project that we undertook to evaluate Fortify Health's iron fortification program in India. Fortify Health provides support to millers to fortify their wheat flour with iron in order to reduce rates of anemia.

We found a meta-analysis of randomized controlled trials that has specifically estimated the effect of iron fortification. And the question again for us is: how do we take this academic estimate of the effect of iron fortification and use it to predict the effects of Fortify Health's programs in some other context?

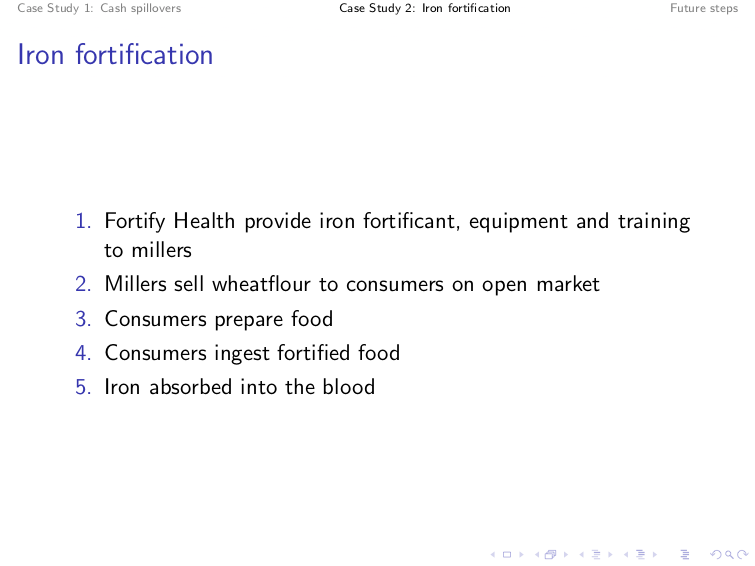

The first step is to outline exactly how this program works. I'll give you a quick overview. Fortify Health provides an iron fortificant to millers. This is essentially a powder that contains the additional iron. They also provide equipment and training so that the millers can integrate that fortificant into their production process and put the iron into the wheat flour. Millers will then sell this fortified wheat flour on the open market.

Consumers purchase the wheat flour and use it to prepare food items like roti. They'll then eat the food and ingest the iron, which needs to be absorbed into the blood before it can have any health effect.

We make adjustments in our cost-effectiveness model for issues that come up in the first three steps. So it may be the case that some of the fortified wheat flour is lost because it goes unsold on the shelves in the market, or because some is wasted during food preparation.

But let's just zoom in on the last two steps — the point at which consumers are actually eating the fortified wheat flour. Can we take the academic study, the meta-analysis estimate of the effect of iron fortification, and assume it's a reasonable guess for the effects of Fortify Health's programs, specifically?

There are a few reasons why it might not be. The first is that the quantity of iron ingested through different iron fortification programs can vary quite a bit. It may be that different programs add a different amount of iron to a given quantity of food. Or it may be that in different contexts, consumers eat a different amount of the fortified food.

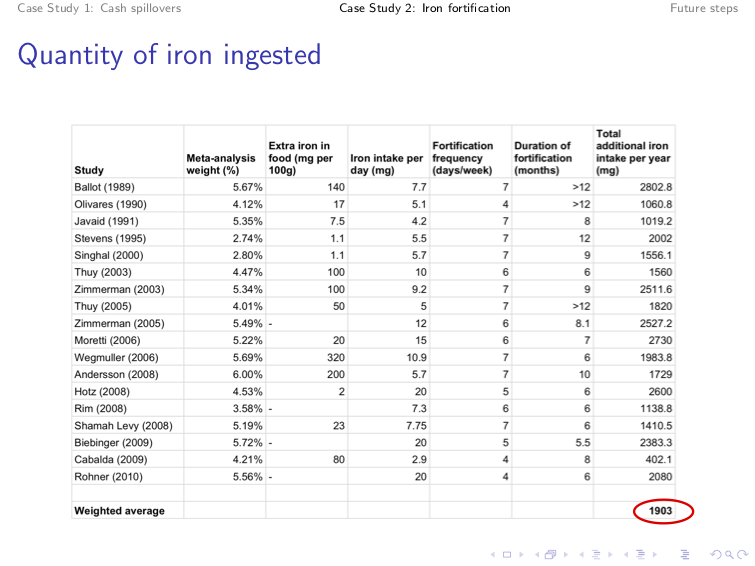

So we went back to each of the 18 academic studies underlying the meta-analysis, and in each case we backed out the total additional amount of iron that consumers ingest as a result of the fortified food. That required taking information on the quantity of iron that's added per 100 grams of the food, the amount of the additional fortified food a person eats per day, the number of days per week that they consume the fortified food, and the duration of the program. In this table, we've aggregated things annually, but we've done this for different lengths of time as well. And what we found is that the average participant in the academic studies consumes an additional 1,903 milligrams of iron per year as a result of this fortified food.
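To make the arithmetic concrete, here is a minimal Python sketch of an annualized version of that calculation. The function name, parameter names, and input values are assumptions for illustration; they are not figures from any of the 18 studies, and the talk does not spell out the exact formula GiveWell used.

```python
def annual_additional_iron_mg(
    iron_mg_per_100g, grams_per_day, days_per_week, weeks_per_year=52
):
    """Additional iron ingested per year (mg) from a fortified food.

    iron_mg_per_100g: iron added per 100 g of the food
    grams_per_day:    amount of the fortified food eaten on a day it is consumed
    days_per_week:    number of days per week the food is consumed
    weeks_per_year:   52 for a full year; lower it for shorter programs
    """
    return iron_mg_per_100g * grams_per_day * days_per_week * weeks_per_year / 100


# Hypothetical inputs: 2 mg of added iron per 100 g, 200 g eaten per day, every day.
print(annual_additional_iron_mg(2.0, 200, 7))  # 1456.0
```

Running the same function on each study's inputs and on a charity's expected inputs gives the two quantities whose ratio is compared next.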

We compared this to our best guess for the additional iron that's ingested through Fortify Health's program. And we believe that the average consumer in Fortify Health's program will only ingest about 67% as much additional iron through the fortified food as the average participant in the academic studies. So at first glance, it seems like Fortify Health's program is probably going to have quite a bit less of an effect than the effects in the academic meta-analysis.

But what matters is not the amount of iron that you ingest, but the amount of iron that's absorbed into your blood. It's only when it's absorbed into the blood that it can have an effect on your health. And the amount that you absorb into the blood depends on several additional factors.

The first of these is the fortification compound that's used to deliver the iron into the body. Fortify Health uses something that's known as sodium iron EDTA [sodium ferric ethylenediaminetetraacetate], but the academic studies use a range of different compounds: things like ferrous sulfate, ferrous fumarate, ferric pyrophosphate, and a lot of other long science words.

So we went back to the academic literature and we asked, “Are there any studies that have tried to estimate the difference in absorption rates across fortification compounds?” And more specifically, we looked at evidence from what are known as isotopic studies. These are studies that provide participants with food that's fortified using different compounds. They label the iron. When you take a blood draw from participants after they have consumed the fortified food, you can trace exactly which iron fortification compound it came from. And using that, you can back out an absorption rate for each fortification compound.

This table [above] comes from a literature review that was conducted by Bothwell and MacPhail. If you look at the third column in the top row, they estimate that the rate of iron absorption from sodium iron EDTA, which is Fortify Health's compound, is about 2.3 times the rate of iron absorption from ferrous sulfate, which is one of the compounds that's used in the academic studies in wheat products. More generally, we found that the rate of iron absorption from sodium iron EDTA is quite a bit greater than the rate of iron absorption from the compounds that are used in the academic studies.

When we make an adjustment for this, in addition to the adjustment that we made previously for the differences in quantities of iron, we predict that the average consumer in Fortify Health's program actually absorbs about 167% as much iron into the blood as the average participant in the academic studies. And so actually, we'd expect Fortify Health's program to have quite a bit greater effect than the effect that was estimated in the meta-analysis in the academic literature.
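The way the two adjustments combine can be sketched as a simple product. This is an assumed simplification rather than GiveWell's actual model: the function name is hypothetical, and the only inputs used are the two figures quoted in the talk (67% as much iron ingested, and a 2.3 times absorption rate relative to ferrous sulfate).

```python
def relative_absorbed_iron(relative_ingested, relative_absorption_rate):
    """Iron absorbed in the charity's program relative to the study average.

    relative_ingested:        iron ingested, as a fraction of the study average
    relative_absorption_rate: absorption rate of the charity's compound divided
                              by that of the compound(s) used in the studies
    """
    return relative_ingested * relative_absorption_rate


# Using only the ferrous sulfate comparison quoted in the talk.
ratio = relative_absorbed_iron(0.67, 2.3)
print(round(ratio, 3))  # 1.541
```

Note that this single-compound product comes out near 154%, not the 167% reported in the talk. The difference presumably reflects that the studies used a mix of compounds with different absorption rates, which this sketch does not attempt to model.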

But we're not done yet. There are at least two additional factors that affect the absorption of iron. The first is the substances that you consume alongside the fortified food. Some substances will inhibit the rate of absorption and others will enhance the rate of absorption. We're particularly worried here about tea consumption. It's been shown in several studies that tea significantly inhibits the absorption of iron even when it's delivered through the sodium iron EDTA compound. And secondly, we're worried about the potential differences in baseline iron levels between the participants in the academic studies and the consumers of Fortify Health's program.

Take this with a pinch of salt, because we've not investigated it deeply yet, but there's some suggestion in the academic literature that the rate of iron absorption is greater when your initial baseline iron levels are lower. Unfortunately, we don't have information on the diets of either the consumers of Fortify Health's program or the participants in the academic studies. And we also don't have information on the baseline iron levels of Fortify Health's consumers. So for the time being, we've not been able to make additional adjustments for these two factors. We'd certainly like to get more information on this if we can in the future, particularly for the consumers of Fortify Health's program.

But hopefully the bigger point that you take from this case study is that if we want to extrapolate the effects from an academic study — in this case, of iron fortification — to a specific charity’s program, we need to think about the mechanism that's driving the effects of the program, and then consider specific local conditions that might affect how that mechanism operates in the charity setting. And then we potentially need to go and find additional data or other academic literature in order to make some adjustments to our model.

What's more, this kind of issue arises even when we’re trying to understand the purely biological effects of what seems like a fairly straightforward health intervention like iron fortification. We might expect these concerns and questions to be a lot more difficult if we're considering a more complicated behavior intervention — say, something like an intervention to increase educational attainment.

How can we do better in the future? Hopefully, you can see from these two case studies that the issues and questions that arise depend a lot on the intervention and the mechanism driving the effect of that intervention. It’s hard to suggest general prescriptions. But we think there are at least two ways that we can be in a better position in the future:

- We'd like to better understand what other policy organizations are doing to tackle these external validity questions. We've spoken to some other organizations and are aware of a few other approaches, such as J-PAL’s. But we'd like to see a few more concrete examples of exactly what steps other organizations are taking to make these external validity adjustments in practice — perhaps something like the case studies that have been laid out here, but with a bit more detail.

- We'd like to see academics spend more time discussing the contextual factors that arose in the study settings they’ve worked in. Our understanding is that most academics spend the vast majority of their time focusing on ruling out threats to internal validity. That's understandable. I don't think that [addressing some of the questions I’ve raised here] will get your work accepted by a better publication or a higher-impact journal. So perhaps we need to think of ways to incentivize academics to do a bit more [work] on external validity.

But hopefully, you have more of a feel for the kinds of questions that come up when you're trying to work out what adjustments you need to make for external validity if you're working for an organization like GiveWell. And if you have any thoughts on these two questions or any other questions about the presentation, I know we've got a little bit of time for Q&A now. Thanks.

Nathan Labenz: I guess [I’ll start with] a simple question. I don't think you mentioned what the actual results are on the spillover, so I was just curious to get a little bit of information about that.

Dan: Yeah. This is tricky. What we wanted to do originally was what I hinted at in the second slide, which was to work out a spillover effect at each distance, then work out the total number of households that live at each distance, and then add up the spillover effects across all of the households to get the total spillover effect. We wanted to make this adjustment quantitatively.

Unfortunately, because we don't have the location of the houses in the untreated villages, we just can't do that. So for the time being, we've had to do the second-best thing, which is to make a qualitative judgment based on our [interpretation] of the most relevant studies to get the right context. In terms of the actual bottom line, that comes out at a 5% negative adjustment.

That is, as I say, much more of a qualitative, subjective guess than what we would like to have when making these kinds of adjustments. And that was basically because some of the studies found a negative effect, some found no effect, and some found a positive effect. And we didn't believe there was strong evidence, [when considering] the literature as a whole, that there were large negative or positive spillover effects. So unfortunately, we couldn't make the quantitative adjustments that we made in the Fortify Health case to the spillovers case. It wasn't as exact as we would've liked.

Nathan: So your best guess for the moment is that for every $1 given to a household, somebody not too far away effectively loses $0.05 of purchasing power?

Dan: I think it's more that we've built in the adjustment by decreasing the total value (or cost-effectiveness) of the program by 5%. I think it's not quite as explicit as hashing out the exact change in consumption, because again, we don't even know how many households are affected per treated household. It’s quite tricky.

Nathan: A striking aspect of your talk is that these issues are not obvious at all from the beginning. So when you sit down to attack a problem like this, how do you begin to identify the issues that might cause this lack of transferability in the first place?

Dan: I think the first step is to outline exactly how you think the program works. I guess some people call it a theory of change or whatever — the causal mechanism. Literally write out each of the steps that you think needs to operate for the program to work, and then address each one in turn. And think critically about what might go wrong. Always approach it from the perspective of “How do I think this [program] is going to fall down? What about the local context might mean that this step in the mechanism just doesn't operate?”

That's obviously quite hard to do if you're a desk-based researcher like we are at GiveWell. So something that helps us quite a lot is to engage with the charities themselves. For this case, we engaged very closely with Fortify Health and they were able to raise some of these issues as well. Then, we could go away and try to find some data for some of the issues. So, I think some collaboration with the charities themselves [helps].

But that's part of the reason why I'd like to see academics discuss this more, because they may be in a better position to be aware of the contextual factors that really matter for the key mechanisms. They're conducting the field work on the ground in a way that's hard for us to do from a desk-based research position.

Nathan: So this question will probably apply [in varying degrees] to different programs, but I'm sure you've also thought about just going out and attempting to measure these things directly, as opposed to doing a theoretical adjustment. What prevents you from doing that? Is that just too costly and intensive, and would you essentially be replicating the studies? Or would you be able to [follow] a distinct process to measure the results of programs in a nonacademic setting?

Dan: Yeah. Hopefully, if you take this sort of approach, you don't need to replicate every single study in every different context in which you want to implement a charity’s program. And it's more a case of thinking about which step might fall down in a particular context and then maybe measuring the information that's directly relevant to that step, as outlined in the study.

I think we don't do this ourselves because we don't have the in-house expertise to do a lot of that data collection. But maybe we could outsource some of this work to other organizations. We'd have to price it out using a model of how cost-effective the research funding itself would be, given that it might affect the amount of money donated to some of the charities. But I think it's something we could definitely consider.

Nathan: Okay. Very interesting. We have a bunch of questions coming in from the [Bizzabo] app. Let me see how many we can get to. How do you account for different degrees of uncertainty or complexity in the causal chains across the interventions that you study? You showed one that had a five-degree causal chain. Seemingly you would prefer things that had two levels and disfavor those that had 10, but how do you think about that?

Dan: We don't have a rule [stating that] if there are too many steps, it's too complicated and we wouldn't consider it. In each case we would just lay out what [the steps] are, and then if we think that there's one step that's so uncertain that we really can't get any information on it at all, and we feel that it's going to have a massive impact on the bottom line, then we would just write that up and probably refrain from making any grants. We would just put a post out there saying, “We're very unsure about this at the moment and we need further academic study. We need to see this, this, or this before we can take a step forward.”

As you say, it'd be nice if everything was just a one-step [process], but I don't think we would ignore an intervention because it had quite a few steps and maybe more uncertainty.

Nathan: Which academics would you recommend as currently doing good work on the question of external validity?

Dan: Honestly, I'm not sure that I have specific names of people who I think are consistently doing a lot of this. I should have a better answer, but no one's jumping out.

Again, at the moment, it seems to me that in general, these kinds of questions are commented on in a paragraph or two in the discussion at the end of a paper, and it feels more like a throwaway comment than something that people have put a lot of thought into in academic studies. I'll dig out a name for you. I'll try and find someone.

Nathan: You've maybe [covered] this a bit already with my first question, but how do you decide how deeply to investigate a given link in the causal chain as you're trying to work out the overall impact of a program?

Dan: With everything we do, it's very hierarchical. We'll try and do an initial base overview — a very, very quick investigation. I think this applies to stuff more generally than to external validity adjustments at GiveWell. So we'll try to take a quick view of things and then get a sense of whether we really think it's going to have a big impact on the bottom line. The bigger impact we think it's going to have on the bottom line, the more willing we are to spend staff time going more deeply into the weeds of the question. So I think it's a very step-by-step process with the amount of time that we spend.

Nathan: Okay. A couple more questions: Let’s say you have a relatively recent and seemingly directly applicable RCT [randomized controlled trial] versus a broad scope of RCTs. Cash transfers would be a good example of this; they’ve been studied in a lot of different contexts. But maybe you can point to one study that's pretty close to the context of a program. How do you think about the relative weight of that similar study versus the broad base of studies that you might consider?

Dan: I think in some cases we actually do try to put concrete weights on each study. We don't want to confuse a study that’s just happening in the same country, or something like that, [with one that’s highly relevant]. If there's a study conducted in a different country, but we think that the steps in the causal chain are going to operate exactly the same because there's no real massive difference in the contextual factors, then we wouldn't massively downweight that study simply because it's conducted in a different context.

In terms of having a lot of different studies, if each of them has a little bit of weight, then that's going to drive some weight away from [a single] study that we think is very, very directly relevant.

But [overall], I think we just take it on a case-by-case basis and try, in some instances, to concretely place different weights on each study in some informal meta-analysis. And that involves thinking through each of the steps and what “similarity” really means — what specific local conditions need to be the same for [us to deem] a study as having a similar context.
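One simple way to picture placing concrete weights on studies is a relevance-weighted average, sketched below in Python. The estimates, the weights, and the function name `weighted_estimate` are all hypothetical, and this is not presented as the method GiveWell actually uses; it only shows how several lightly weighted studies can pull the combined figure away from the one judged most relevant.

```python
def weighted_estimate(studies):
    """Relevance-weighted average of study estimates.

    studies: list of (estimate, weight) pairs, where the weight reflects a
    subjective judgment of how similar the study context is to the program.
    """
    total_weight = sum(weight for _, weight in studies)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(estimate * weight for estimate, weight in studies) / total_weight


# Hypothetical spillover estimates (proportional change in consumption) and weights.
studies = [
    (-0.10, 0.5),  # judged most similar to the program's context
    (0.00, 0.3),   # somewhat similar
    (0.05, 0.2),   # least similar, so given the least weight
]

print(round(weighted_estimate(studies), 4))  # -0.04
```

The hard part is choosing the weights, which requires the step-by-step judgment about local conditions described in the answer above.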

Nathan: We're a little bit over time already, so this will have to be the last question. When you identify these issues that seem to imply changes, I assume they're normally in the “less effective” direction. You did note one that was moving things toward “more effective.” [In cases like that,] do you then go back and talk to the original study authors and communicate what you found? Has that been a fruitful collaboration for you?

Dan: That's not something we've done so far. It seems like something that maybe we should do a bit more in future, but certainly in the projects that I've worked on, [we haven’t] so far. So that's a good piece of advice.

Nathan: Good question. Well, it is always a lesson in epistemic humility when we talk to folks from GiveWell. You do great work and think very deeply, so thank you for that.