Psychology of Existential Risk and Long-Termism

Consider three scenarios: scenario A, where humanity continues to exist as we currently do; scenario B, where 99% of us die; and scenario C, where everyone dies. Clearly scenario A is better than scenario B, and scenario B is better than scenario C. But exactly how much better is B than C? In this talk from EA Global 2018: London, Stefan Schubert describes his experiments examining public opinion on this question, and how best to encourage a more comprehensive view of extinction’s harms.

A transcript of Stefan's talk is below, including questions from the audience, which we have lightly edited for clarity. You can discuss this talk on the EA Forum.

The Talk

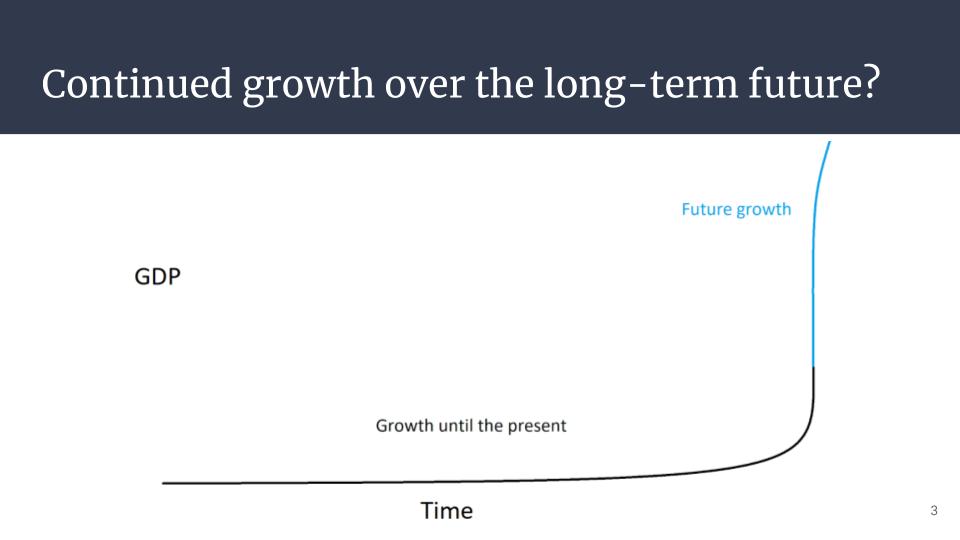

Here is a graph of economic growth over the last two millennia.

As you can see, for a very long time, there was very little growth, but then it gradually started to pick up during the 1700s, and then in the 20th century, it really skyrocketed.

So now the question is: what can we infer about future growth from this picture of past growth?

Here is one possibility, perhaps the most obvious one: growth will continue into the future, and hopefully into the long-term future. That would mean not only greater wealth, but also better health, longer life spans, more scientific discoveries, and more human flourishing in all kinds of other ways. So, a much better long-term future.

But, unfortunately, that's not the only possibility. Experts worry that growth might continue for some time and then civilization might collapse.

For instance, civilization could collapse because of a nuclear war between great powers or an accident involving powerful AI systems. Experts also worry that civilization might not recover from such a collapse.

The philosopher Nick Bostrom, at Oxford, has called these kinds of collapses or catastrophes "existential catastrophes." One kind of existential catastrophe is human extinction: the human species dies out, and no humans will ever live again. That will be my sole focus here. But he also defines another kind of existential catastrophe, in which humanity doesn't go extinct but its potential is permanently and drastically curtailed. I won't talk about such existential catastrophes here.

So, together with another Oxford philosopher, the late Derek Parfit, Bostrom has argued that human extinction would be uniquely bad, much worse than non-existential catastrophes. And that is because extinction would forever deprive humanity of a potentially grand future. We saw that grand future on one of the preceding slides.

So, to make this intuition clear and vivid, Derek Parfit devised the following thought experiment, in which he asked us to consider three outcomes: first, peace; second, a nuclear war that kills 99% of the human population; and third, a nuclear war that kills 100% of the human population.

Parfit's ranking of these outcomes, from best to worst, was as follows: peace is the best, near extinction is second, and extinction is the worst. So, no surprises so far. But then he asks a more interesting question: "Which difference, in terms of badness, is greater?" Is it the First Difference, as we call it, between peace and 99% dead? Or the Second Difference, between 99% dead and 100% dead? I will use these terms, the First Difference and the Second Difference, throughout this talk, so they are worth remembering.

So which difference do you find the greater? That depends on what key value you have. If your key value is the badness of individual deaths or the individuals that suffer, then you're gonna think that the First Difference is greater, because the First Difference is greater in terms of individual deaths. But there is also another key value which one might have, and that is that extinction and the lost future that it entails is very bad. And of course, only the third of these outcomes, 100% death rate, means extinction and a lost future.

So only the Second Difference involves a comparison between an extinction and a non-extinction outcome. So this means if you focus on the badness of extinction and the lost future it entails, then you are gonna think that the Second Difference is greater.
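To make the two key values concrete, here is a rough back-of-the-envelope comparison. It assumes, purely for illustration, a population of size $N$, and scores each difference separately on the number of deaths ($D$) and on whether it crosses into extinction ($E = 1$ if it does). This decomposition is an illustration of the reasoning above, not notation used by Parfit or in the study.

```latex
% Illustrative only: N is an assumed population size; E = 1 marks a move into extinction
\begin{aligned}
\text{First Difference (peace} \to 99\%\text{ dead):}\quad & D_1 = 0.99N - 0 = 0.99N, & E_1 &= 0 \\
\text{Second Difference (}99\% \to 100\%\text{ dead):}\quad & D_2 = 1.00N - 0.99N = 0.01N, & E_2 &= 1 \\
& \frac{D_1}{D_2} = \frac{0.99N}{0.01N} = 99
\end{aligned}
```

Counted in deaths, the First Difference is 99 times larger; counted in terms of extinction and the lost future, only the Second Difference registers at all.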

Parfit hypothesized that most people will find the First Difference to be greater because they're gonna focus on the individual deaths and all the individuals that suffer. So this is, in effect, a psychological hypothesis. But his own ethical view was that the Second Difference is greater. So, in effect, that means that extinction is uniquely bad, and much worse than a non-existential catastrophe. And that is because Parfit's key value was the lost future that human extinction would entail.

So then, together with my colleagues, Lucius Caviola and Nadira Faber, at the University of Oxford, we wanted to test this psychological hypothesis of Parfit's, namely that people don't find extinction uniquely bad.

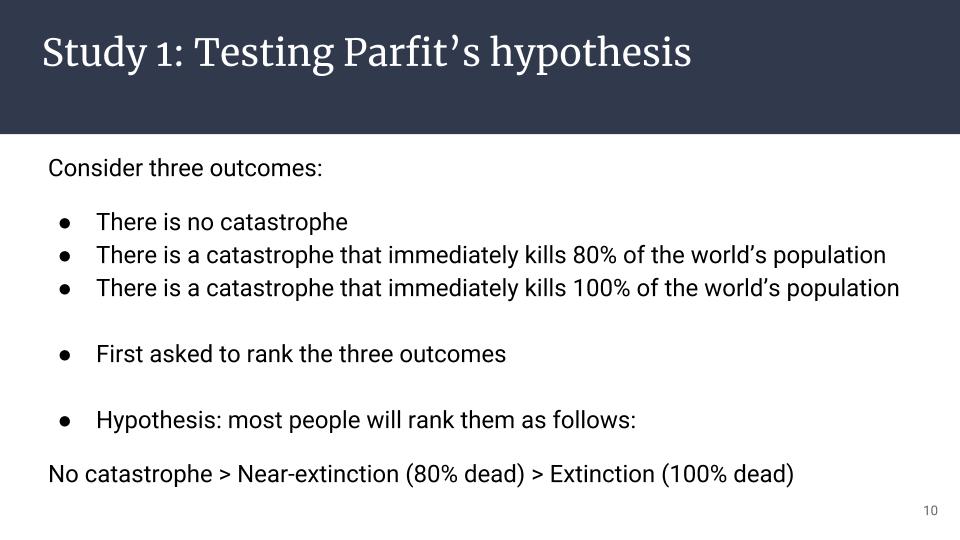

We did this using a slightly tweaked version of Parfit's thought experiment. We again asked people on different online platforms to consider three outcomes. But the first outcome wasn't peace, because we found that people had certain positive emotional associations with the word "peace," and we didn't want that to confound the results. Instead, we just said there's no catastrophe.

With regard to the second outcome, we made two changes: we replaced nuclear war with a generic catastrophe, because we weren't specifically interested in nuclear war, and we reduced the number of deaths from 99% to 80%, because we wanted people to believe that we could likely recover from this catastrophe. The third outcome was that 100% of people died.
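As a rough check on what this tweak does to the individual-deaths view, here is the death count for each difference under the revised outcomes, again assuming an illustrative population size $N$ (the symbols are for illustration only and do not come from the study):

```latex
% Illustrative only: N is an assumed population size
\begin{aligned}
D_1 &= 0.80N - 0 = 0.80N && \text{(no catastrophe} \to 80\%\text{ dead)} \\
D_2 &= 1.00N - 0.80N = 0.20N && \text{(}80\% \to 100\%\text{ dead)} \\
\frac{D_1}{D_2} &= \frac{0.80N}{0.20N} = 4
\end{aligned}
```

So someone who focuses only on individual deaths should still judge the First Difference greater, by a factor of 4 rather than the factor of 99 implied by Parfit's 99%-dead version, while only the Second Difference still crosses into extinction.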

We first asked people to rank the three outcomes. Our hypothesis was that most people would rank these outcomes as Parfit thought that one should, namely that no catastrophe is the best and then near extinction is second and extinction is the worst.

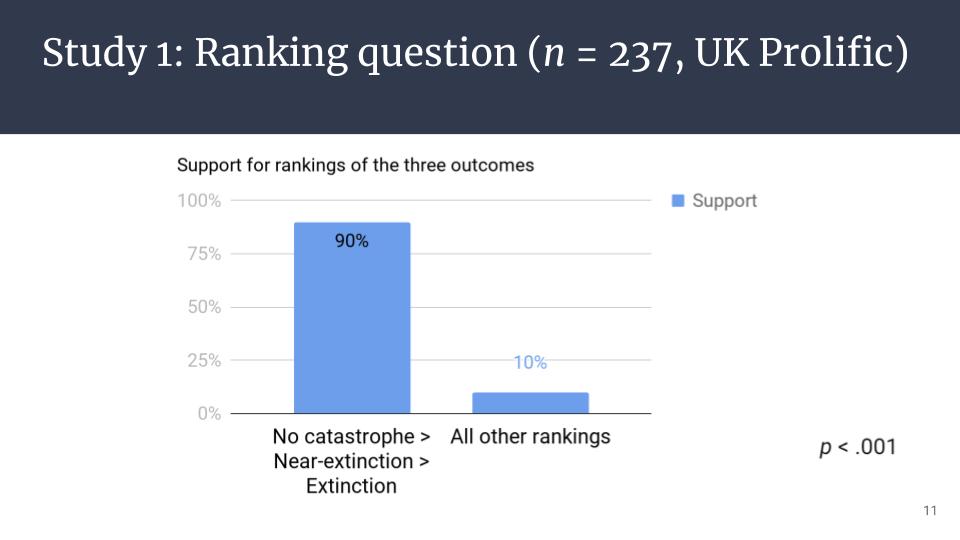

This was indeed the case: 90% gave this ranking, and all other rankings together got only 10%. But then we went on to another question, which we only gave to those participants who had given the predicted ranking. The other 10% were excluded from the rest of the study.

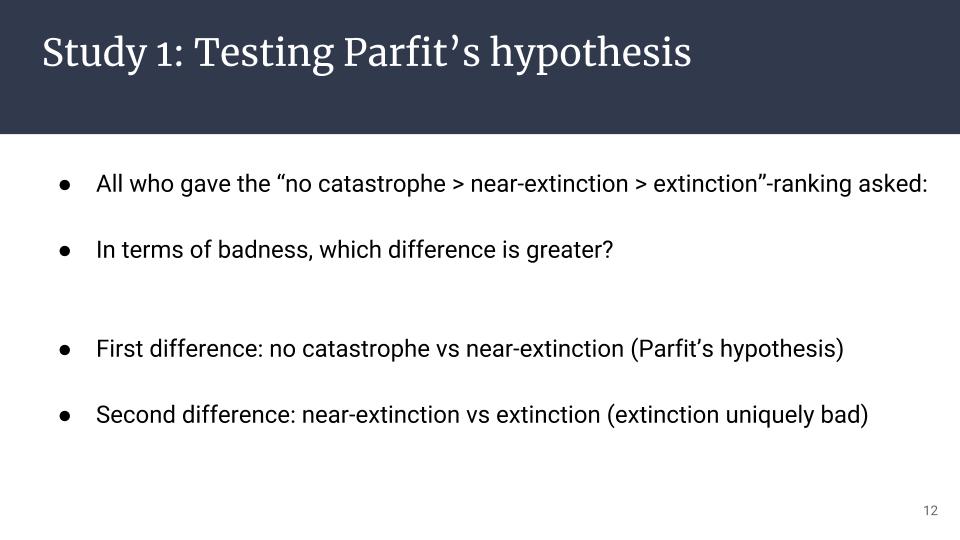

We asked: "In terms of badness, which difference is greater: the First Difference, between no catastrophe and near extinction, or the Second Difference, between near extinction and extinction?" As you'll recall, Parfit's hypothesis was that most people would find the First Difference greater. Finding the Second Difference greater means finding extinction uniquely bad.

And we found that Parfit was indeed right. A clear majority found the First Difference greater, and only a minority found extinction to be uniquely bad.

So then we wanted to know "Why is it that people don't find extinction uniquely bad?" Is it because they focus very strongly on the first key value, they really focus on the badness of individual deaths and individual suffering? Or is it because they focus only weakly on the other key value, on the badness of extinction and the lost future which it entails?

So we included a series of manipulations in our study to test these hypotheses. Some decreased the badness of individual suffering, and others emphasized or increased the badness of a lost future, so each targeted either the first or the second of these hypotheses. The condition whose results I've just shown you thus served as a control condition, and we also had a number of experimental conditions, or manipulations.

In total, we had more than 1,200 participants in the British sample, making it a fairly large study. We also ran an otherwise identical study on a US sample, which yielded similar results, but here I will focus on the larger British sample.

Our first manipulation involved zebras. Here we had exactly the same three outcomes as in the control condition, except that we replaced humans with zebras. Our reasoning was that people likely empathize less with individual zebras; they don't feel as strongly about an individual zebra that dies as they do about an individual human that dies. Therefore, there would be less focus on individual suffering, the first key value, whereas people might still care pretty strongly about the zebra species, we thought, so extinction would still be bad.

So overall, this would mean that more people would find extinction uniquely bad when it comes to zebras. That was our hypothesis, and it was borne out: a significantly larger proportion of people found extinction uniquely bad for zebras than for humans in the control condition, 44% versus 23%.

Our second manipulation went back to humans, but changed what happened to them: instead of getting killed, they could no longer have children. And of course, if no one can have children, then humanity will eventually go extinct.

Here, again, we thought that people would feel less strongly about sterilization than about death, so there would be less focus on the first key value, individual suffering, whereas extinction and the lost future that it entails would be as bad as in the control condition.

So overall, this should make more people find extinction uniquely bad when it comes to sterilization. This hypothesis was also borne out: 47% said extinction was uniquely bad in this condition, again a significant difference compared to the control condition.

And then our third manipulation was somewhat different. So here we had, again, the three outcomes from the control condition. But then after that we added the following text: "Please remember to consider the long term consequences each scenario will have for humanity. If humanity does not go extinct, it could go on to a long future. This is true even if many, but not all, humans die in a catastrophe, since that leaves open the possibility of recovery. However, if humanity goes extinct, there would be no future for humanity."

So the manipulation makes it clear that extinction means no future, while non-extinction may mean a long future. It emphasizes the badness of extinction and losing the future, and so affects that key value, whereas the other key value, the badness of individual suffering, isn't really affected.

So overall, it should again make more people find extinction uniquely bad, and we found a similar effect as before: 50% now found extinction uniquely bad. Note that in this salience manipulation, we didn't add any new information; we just highlighted certain inferences which one could, in principle, have made even in the control condition.

But we also wanted to include one condition where we actually added new information. We called this the good future manipulation. So here in the first outcome we said not only that there is no catastrophe, but also, humanity goes on to live for a very long time in a future which is better than today in every conceivable way. There are no longer any wars, any crimes, any people experiencing depression or sadness and so on.

So, really a utopia. The second outcome was very similar: here, of course, there was a catastrophe, but we recover from it and then go on to the same utopia. The third outcome was again extinction, but we really emphasized that extinction means that no humans will ever live again and all of human knowledge and culture will be lost forever.

So this was a very strong manipulation. We hammered home the extreme differences between these three outcomes, and I think that should be remembered when we look at the results, because here we found quite a striking difference.

This manipulation, then, says that the future will be very good if humanity survives, and that we would recover from a non-extinction catastrophe. So it makes losing the future worse, which affects that key value, while the other key value, the badness of individual suffering, is not affected.

So, overall, this should make more people find extinction uniquely bad, and that's really what we found here. 77% found extinction uniquely bad given that we would lose this very great future indeed.

So let's sum up: what have we learned from these four experimental conditions about why people don't find extinction uniquely bad in the control condition? One hypothesis was that people focus strongly on the badness of people dying in the catastrophes. We find support for this: when we reduced the badness of individual suffering, as in the zebra and sterilization manipulations, more people found extinction uniquely bad.

Our second hypothesis was that people don't feel that strongly about the other key value, the lost future, and we found some support for that as well. One reason people don't feel strongly about it is that they don't consider the long-term consequences that much. We know this because when we highlighted the long-term consequences, as in the salience manipulation, more people found extinction uniquely bad.

Another reason why people focus weakly on the lost future is that they have certain empirical beliefs which reduce the value of the future. So they may believe that the future will not be that good if humanity survives. And they may believe that we won't recover if 80% die. And we know this because when we said that the future will be good if humanity survives, and that we will recover if 80% die, as we did in the good future manipulation, then more people found extinction uniquely bad.
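For reference, the British-sample percentages reported in this talk can be gathered in one place. The last column is simple subtraction from the 23% control figure; it is an addition for readability and implies no significance tests beyond those mentioned in the talk.

```latex
% Percentages as reported in the talk; "change" is percentage points relative to control
\begin{array}{lcc}
\text{Condition} & \text{Found extinction uniquely bad} & \text{Change vs. control (pp)} \\
\hline
\text{Control (humans)} & 23\% & \text{--} \\
\text{Zebras} & 44\% & +21 \\
\text{Sterilization} & 47\% & +24 \\
\text{Salience} & 50\% & +27 \\
\text{Good future} & 77\% & +54 \\
\end{array}
```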

Briefly, I should also present another study that we ran with an "x-risk reducer sample." This is a sample of people focused on reducing existential risk, whom we recruited via the EA Newsletter and social media. Some of you may actually have taken this test; if so, thank you for helping our research.

Here we had only two conditions: the control condition and the good future condition. We hypothesized that nearly all participants would find the Second Difference greater in both conditions, that is, that nearly all participants would find extinction uniquely bad.

This was indeed what we found, and it is quite a striking difference compared to laypeople, among whom we found a big difference between the good future condition and the control condition. The x-risk reducers find extinction uniquely bad even without any information about how good the future is going to be.

So that sums up what I had to say about this specific study. Let me now zoom out a little and say a few words about the psychology of existential risk and the long-term future in general. We think that this is just one example of a study that one could run, and that there could be many valuable studies in this area.

One reason we think that is that there seems to be a general fact about human psychology: we think quite differently about different domains. One example, which should be familiar to many effective altruists, is that people in general think very differently about charitable donations and individual consumption; most people think much more about what they get for their money when it comes to individual consumption than when it comes to charity.

So similarly, it may be that we think quite differently when we think about the long-term future compared to when we think about shorter time frames. So it may be, for instance, that if we think about the long-term future we have a more collaborative mindset because we realize that, in the long-term, we're all in the same boat.

I don't know whether that's the case; I'm speculating a bit here. But I think our prior that we have a distinctive way of thinking about the long-term future should be quite high. And it's important to learn whether that's the case and how we do think about the long-term future.

Because, ultimately, we want to use that knowledge. We don't just want to gather it for its own sake. But we want to use it in the project that many of you, thankfully, are a part of, to create a long-term future.

Questions

Question: What about those 10% of people who kind of missed the boat, and got the whole thing flipped? Was there any follow up on those folks? And what are they thinking?

Stefan: I think Scott Alexander at some point wrote a blog post about how you always get this sort of thing on online platforms. You always get some responses which are difficult to understand, so you very rarely get 100% agreement on anything. I wouldn't read too much into those 10%.

Question: Were these studies done on Mechanical Turk?

Stefan: Yeah, so we run many studies on Mechanical Turk, but the main study that I presented here was run on a competitor to Mechanical Turk called Prolific, where we recruited British participants; on Mechanical Turk we typically recruit American participants. As I said, we also ran a study on American participants, which essentially replicated the study I presented. But what I presented concerned British participants on Prolific.

Question: Were there any differences in zebra affinity between Americans and Britons?

Question: Did you consider a suffering focused manipulation, to increase the salience of the First Difference?

Stefan: That's an interesting question. No, we have not considered such a manipulation.

I guess in the sterilization manipulation there is substantially less suffering involved. What we say is that 80% can't have children and the remaining 20% can, so there seems to be much less suffering going on in that scenario compared with the others. I haven't thought through all the implications of that, but it is certainly something to consider.

Question: Where do we go from here with this research?

Stefan: Yeah, I mean, one thing I found interesting was, as I said, that the good future manipulation is very strong, so it's not obvious that quite as many people would find extinction uniquely bad if we made it a bit weaker.

But that said, we have some converging evidence for the conclusions I drew. We had one other pre-study where we asked people more directly, "How good do you think the future will be if humanity survives?" And we found that they thought the future would be slightly worse than the present. That seems somewhat unlikely given the first graph that I showed, since the world has arguably become better. Some people don't agree with that, but that would be my view.

So one thing that stood out to me is that people are probably fairly pessimistic about the long-term future, and that may be one key reason why they don't consider human extinction so important.

And in a sense, I find that good news, because this is something you can inform people about. It might not be super easy, but it seems somewhat tractable. If, by contrast, people had some deeply held moral conviction that extinction isn't that important, it might have been harder to change their minds.

Question: Do you know of other ways to shift or nudge people in the direction of intuitively, naturally taking into account the possibility that the future represents?

Stefan: Yeah, someone else actually ran more informal studies in which they mapped out the argument for the long-term future. It was broadly similar to the salience manipulation, but with much more information, and also ethical arguments.

And then, as I recall, that seemed to have a fairly strong effect. So that falls broadly in the same ballpark. So basically, you can inform people more comprehensively.