How can effective altruism stay curious?

In this 2018 talk, Will MacAskill invites the participants of EA Global San Francisco to stay curious, and explores the tension between more goal-focused and more exploratory communities. This is a transcript of Will's talk, which we have lightly edited for readability.

I'm excited about the theme of this conference, which is “stay curious”. From the sound of things, there’s quite a lot of curiosity in the room, so I'm pretty happy about that. I'm going to talk a little about the challenges and opportunities involved in building a really open-minded community, and how to think about them. To do that, I'm going to draw a contrast between two communities that existed two and a half thousand years ago, and show how any community can resemble one or the other in different ways.

The first is ancient Sparta. Sparta was an amazing city-state, built entirely around trying to create the perfect army. And communities in general can be more or less like an army. The idea is that you've got a single goal: something you're really trying to achieve. If you want to achieve that goal, you want to enforce conformity of beliefs and conformity of values, that is, conformity with respect to what the end goals are. You also want a kind of hierarchy, so that it's unquestioned whom you listen to and whose views are less important. That’s one model of the ideal community you could aspire towards.

Second, in contrast, is ancient Athens, where people prized something very different. It was not so much about having a goal; instead, they were in the marketplace of ideas. They really cherished open-ended discussion and following the argument wherever it leads. There was also far less conformity of ideas. It was much more about celebrating diversity and disagreement, and there wasn't the same sort of hierarchy as in Sparta.

Socrates could accost someone in the square, and the idea, at least according to the ideals they held, was that the best argument would win. So when I present these two (Athens and Sparta), the question is: what sort of community do we want to be? I bet you're all thinking Sparta, because they look badass. No, I imagine most of you are thinking, in this setup: “Obviously we want to be more like the Athenians and less like the Spartans.” And it's true that the Athenian sort of community has many, many benefits over the Spartan one. One is simply: what if you're wrong about your goal? What if you're aiming at the wrong thing, or at least some of your beliefs are incorrect? Social and intellectual movements in general seem to suffer from a problem where certain beliefs become markers of tribal membership.

Take the environmentalist movement, for example. It cares about environmental destruction and about climate change above anything else, but one of its core beliefs, at least very commonly, is opposition to nuclear power, which from my perspective seems just completely insane. Nuclear power seems like an amazing partial solution to the problem of climate change, but it's been ruled out because a certain attitude to nuclear power is just part of what it means to belong to the environmentalist movement. That seems pretty bad.

There are other benefits to the more Athenian approach as well. One is broader appeal: you’re no longer selecting for a very narrow set of beliefs, preferences, or values, so many more people can find a place in the community.

Athenian communities are also possibly less fragile. You avoid the narcissism of small differences, people are more comfortable with disagreement, and so there's perhaps less risk of the infighting that so often plagues other social and intellectual movements.

But the main thing I want to convey is that there is a genuine tension between being Athenian and being Spartan. It's a genuinely deep problem. The main reason is: have you ever tried to get a group of philosophers to do anything? It's extremely difficult! I was at a philosophy conference just a couple of weeks ago, and we were trying to get everyone to go to the bar afterwards. It took about an hour to rally everyone together. Finally, people started moving in the right direction, but they were all just following each other, so no one actually knew where they were going. Then we got to the bar, and everyone stopped outside and just carried on their conversations. If you asked them why we had stopped, they'd say, “Oh, I don't know. I guess the, um, you can't get in or something.” No one had thought about the very final stage, which is opening the door and going in. And this is a problem, because as a community we really want to achieve things. We think there are really big problems in the world, and we want to solve them.

If there's one thing that's good about an army, it’s that armies are very good at getting things done. And there are real benefits to conformity of beliefs and conformity of values. You get to solve principal-agent problems: if someone has the same beliefs and values as you, and you tell them to do something, you know they're going to pursue that goal, and pursue it in basically the same way you would, so you don't have to spend effort and resources checking up on them. It also means you can have extremely high levels of trust. In general, it's actually a lot like the shared-aims community that we talked about so much last year. The level of cooperation and coordination you can have when everyone shares the same beliefs and values is very powerful. I also think there are structural pressures that push communities towards being more like Sparta and less like Athens.

One is that organizations trying to get something done are going to want to hire people who have similar worldviews and similar beliefs, because they'll be able to work together better. Or, if they're providing training or help to someone, it’ll seem like a better deal if they can provide it to someone who will act the way they think is right. So again, you're going to get preferential assistance going to people whose beliefs and values are closer to the mainstream. Then, as a community grows, you increasingly have to rely on social epistemology. You have to start deferring to certain people, because there’s not enough time for everyone to scrutinize everything for themselves.

And again, that's very good! In fact, it's vital. We wouldn't be able to hold such a sophisticated set of beliefs if we didn't do that. But it makes the community much more fragile, because a lot of weight is placed on the views of a small number of people. So I think it's no surprise that, when you look around at different social movements, they often have this Spartan army mentality. I think it’s kind of how humans are programmed. That means we can’t just blindly say: “Oh yeah, of course we're Athens. We're free thinkers. We question everything; that's what we're like.” Instead, it's going to require quite a bit more work. The solution is to try to get the best of both worlds.

In a slogan, I think we want to try to build an army of philosophers. I think I'm the first person ever to have suggested this idea, but I'm going to stand by it. There are a few ways in which we can actually make this happen. One, and this is a core part of the effective altruism project, is having a community that's based on a higher level of meta than many other communities. It's not about any particular belief; it's about three things, all stated quite abstractly:

- A decision-theoretic idea: trying to maximize the good we do, rather than just satisfice (that is, do “enough” good).

- An epistemological view: being closely aligned with science, and broadly Bayesian in the way we think about things.

- A set of values: first, that everyone counts equally, and second, that promoting wellbeing is among the most important things we could be doing right now.

Importantly, I think this does actually rule out a lot of possible views. It would be really bad to start defining effective altruism so broadly that everything counts. I once asked a friend what he thought effective altruism is, and he said, “I think effective altruism is acting with integrity.” Obviously, that's way too broad: the community would then just be everyone, because everyone aspires to that. At the same time, there's still plenty of room for differing beliefs about how to do the most good, and for differing values too, because even within this broad idea of impartiality and welfare there are tons of opportunities for disagreement.

One potential solution, then, is noting that what unifies us is a meta-level agreement, and that agreement can still unify us even if we hold very different views at the object level. Another is having really strong coordination norms. Part of the push towards being more like Sparta comes from the fact that conformity helps so much with coordination: you can trust others, and you don't have to check up on people to see how they're doing. But if we have very strong norms of coordination, where we still trust each other and work together even when our views differ a lot, then the additional benefits of conformity of beliefs and values become much smaller.

I actually think we do really well at this as a community; we emphasized it a lot last year. Another solution is cultural: people should feel trusted and safe to express more unusual views. You should be able to disagree with others without being disagreeable, without getting angry, and without feeling that you’ll be alienated, pushed out, or criticized just for having a different set of views. I think that's extremely important too, and it's something we want to put the spotlight on at this conference. So, with that in mind, I'm going to propose an experiment we can all try this weekend. I have no idea how it will go, but we'll find out.

Eliezer Yudkowsky once wrote a blog post about holding a New Day, where you don't visit any website you've visited before, you don't read any book you've read before, you do everything completely new, and whenever you notice yourself thinking a thought you’ve thunk before, you muse on something else. I was inspired by this. My proposal is to take one belief that is particularly dear to you, one that is an important assumption in your whole view of the world, and try your best, at least for part of the conference, to believe the opposite.

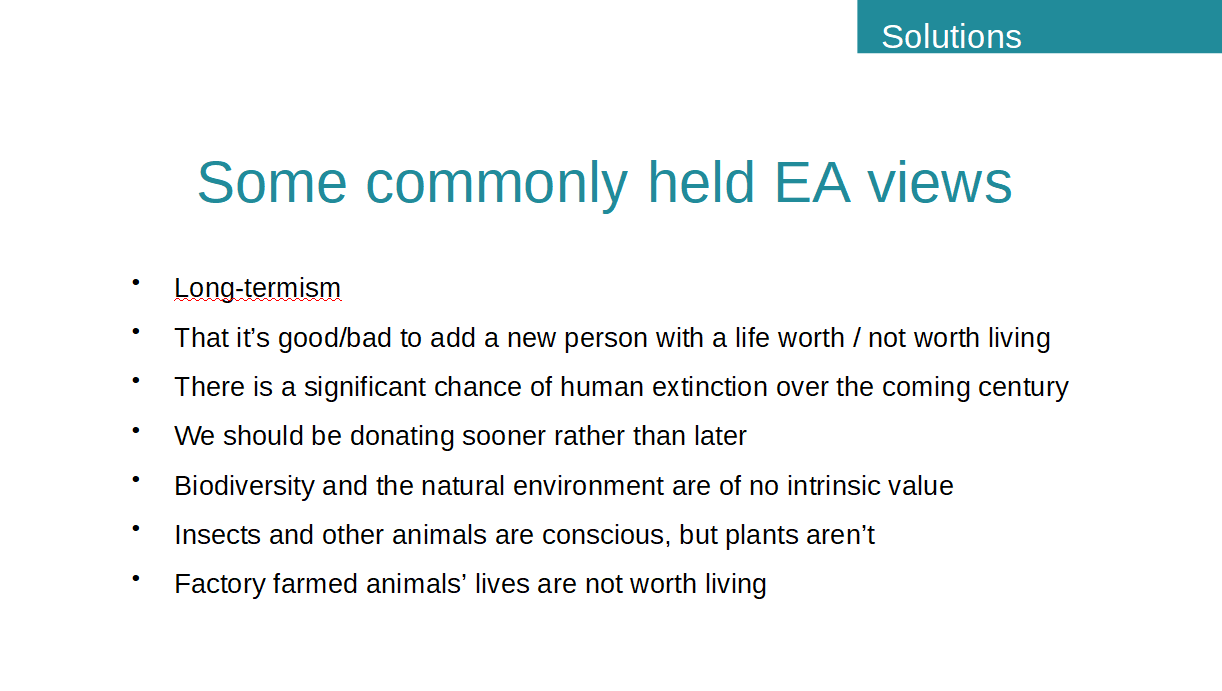

When I first saw this, I was like, this could really be carnage. You've got people not believing in gravity and walking off buildings and so on. Maybe don't go that far. Here are some views that are particularly commonly held within effective altruism, which you might want to try inverting, at least some of the time. Perhaps you're at a talk you agree with, and you think: “How would this look if I held the opposite of the assumption I'm questioning?” Or, in fact, go to talks that would only make sense for you to attend if you held the opposite belief, or deliberately seek out people you disagree with. Don't try to get into an argument about who’s right; instead, really try to see what the world looks like from their perspective.

Part of the reason I'm interested in experimenting with this is that, very often, when we hold a particular view, it's not so much that we've weighed the reasons on each side and assessed them. It's more that the view is part of an overall worldview, and it makes sense given that worldview. If you try switching it, you might start to see how everything looks, and how everything fits together, under a very different worldview. So I think the approach is similar to simulated annealing in machine learning, where the idea is that, rather than always making whichever belief change looks best on the margin, you sometimes (regularly, in fact) accept a random change, even one that looks worse. The reason for this in machine learning is to avoid getting stuck in a local optimum: any particular change you could make right now looks worse, or less plausible, so you stay put, even though there may be a better worldview that fits together as a package.
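For readers unfamiliar with the technique behind the analogy, here is a minimal Python sketch of simulated annealing; it is not from the talk. It assumes a toy one-dimensional "landscape" (the hypothetical `landscape` function) with a shallow local minimum at x = 10 and a deeper global minimum at x = 40, a hypothetical `step` neighbor function, the standard Metropolis acceptance rule, and geometric cooling. All parameter values are illustrative. A purely greedy search (hypothetical `greedy`) is included for contrast.

```python
import math
import random


def acceptance_probability(delta: float, temperature: float) -> float:
    """Metropolis rule (minimizing): always accept a change that is no worse;
    accept a worse change with probability exp(-delta / temperature)."""
    if delta <= 0:
        return 1.0
    if temperature <= 0:
        return 0.0
    return math.exp(-delta / temperature)


def simulated_annealing(cost, neighbor, start, t0=10.0, cooling=0.995, steps=5000, seed=0):
    rng = random.Random(seed)  # seeded so runs are reproducible
    current = best = start
    temperature = t0
    for _ in range(steps):
        candidate = neighbor(current, rng)
        delta = cost(candidate) - cost(current)
        # While the temperature is high, worse moves are often accepted, which
        # lets the search climb out of a local optimum; as it cools, it turns greedy.
        if rng.random() < acceptance_probability(delta, temperature):
            current = candidate
        if cost(current) < cost(best):
            best = current
        temperature *= cooling  # geometric cooling schedule
    return best


def greedy(cost, neighbor, start, steps=5000, seed=0):
    """Only ever accepts changes that look no worse on the margin."""
    rng = random.Random(seed)
    current = start
    for _ in range(steps):
        candidate = neighbor(current, rng)
        if cost(candidate) <= cost(current):
            current = candidate
    return current


def landscape(x: int) -> int:
    """Hypothetical toy cost: local minimum at x=10 (cost 5), global minimum at x=40 (cost 0)."""
    return min(abs(x - 10) + 5, abs(x - 40))


def step(x: int, rng: random.Random) -> int:
    """Hypothetical neighbor: move one unit left or right, staying within [0, 50]."""
    return max(0, min(50, x + rng.choice((-1, 1))))


if __name__ == "__main__":
    print("greedy from 10 ends at", greedy(landscape, step, start=10))
    print("annealing from 10 ends at", simulated_annealing(landscape, step, start=10))
```

Greedy search started at 10 never moves, because both neighbors look worse. Annealing can wander past the barrier around x = 22 while the temperature is high; whether a particular run reaches 40 depends on the seed and the cooling schedule.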

Going back to the list of views you might invert: avoid what Scott Alexander calls a “50 more Stalins” approach. For example, perhaps you think AI timelines are very short. Don't then say, “Well, imagine if I thought they were even shorter!” That's not in the spirit of what I'm suggesting. Instead, try inverting something that is genuinely core to you.

In my own case, here is what I'm going to try. I have a certain view on philosophical methodology that places a lot of weight on simple theories, elegance, and explanatory power, even if that means overturning a lot of our common-sense intuitions, and I'm going to try to invert that. I'm going to think about how I would see the world if I actually believed: “Philosophical arguments don't have a very good track record. Why should I trust this abstract reasoning when my particular intuitions seem so clearly correct to me?” This is going to be hard for me. It would mean believing that my entire career to date has been a waste of time, but I'm at least going to give it a go.

So if you find me and want to accost me with some sort of philosophical argument, I'll try my best to be an intuitionist rather than a theory person. And I want all of you to try this experiment over the course of the conference.

This is what staying curious means. For this weekend, really try to indulge your curiosity, and ask yourself: “What are the things I really wish I'd figured out? Which assumptions have I been acting on for quite a while that I really want to scrutinize?”
