In the October 2016 EA Newsletter, we discussed Will MacAskill’s idea that discovering an important but unaddressed moral catastrophe, which he calls “Cause X,” could be one of the most important goals of the EA community. By its very nature, Cause X is likely to be an idea that seems implausible or silly today but will seem obvious in the future, just as ideas like animal welfare or existential risk were laughable in the past. This characteristic of Cause X (that it may seem implausible at first) makes searching for it a difficult challenge.

Fortunately, I think we can look at the history of past Cause X-style ideas to uncover some heuristics we can use to search for and evaluate potential Cause X candidates. I suggest three such heuristics below.

Heuristic 1: Expanding the moral circle

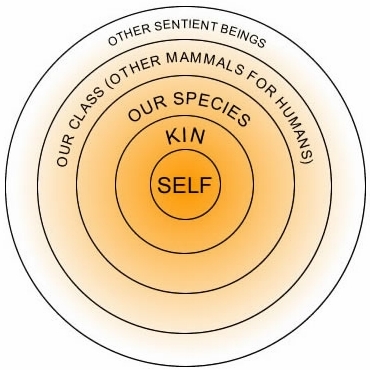

One notable trend in moral thinking since the Enlightenment is the expansion of the set of entities in the world deemed worthy of moral consideration. In Classical Athens, women had no legal personhood and were under the guardianship of their father or another male relative. In ancient Rome, full citizenship was granted only to male Roman citizens, with limited forms of citizenship granted to women, citizens of client states, and freed slaves. Little or no moral consideration was paid to slaves and people outside of Roman rule.

Most societies consider “insider” groups like the family or the tribal group to have moral worth and assign lesser or no moral significance outside that group. Over time, there seems to have been a steady expansion of this moral circle.

In Classical Athens, it seemed obvious and normal that only men should have political rights, that fathers should be allowed to discard unwanted infants, and that slaves should serve their masters. Concepts like "war crimes"—that foreigners had any right to humane treatment—or "women's rights" would have sounded shocking and unnatural.

As the centuries have progressed, there has been a growing sense that categories like gender, nationality, sexuality, and ethnicity don't determine the worth of a person. Movements for the liberation or fairer treatment of slaves, women, children, racial minorities, sexual minorities, and non-human animals went from seeming absurd and intractable to being broadly accepted. Forms of subjugation that once seemed natural and customary grew controversial and then unacceptable.

In fact, we can think of many of the popular cause areas in effective altruism as targeting beneficiaries that have not yet gained full moral consideration in society. Global poverty expands the moral circle to include people you may never meet in other countries. Animal welfare expands the circle to include non-human animals. And far future causes expand the circle to include sentient beings that may exist in the future. Cause X may follow this trendline by taking the expanding moral circle in unexpected or counterintuitive directions.

Therefore, a natural heuristic for discovering Cause X is to push the trendline further. What sentient beings are being ignored? Could sentience arise in unexpected places? Should we use sentience to determine the scope of moral consideration or should we use some other criteria? Answering these questions may yield candidates for unexplored ways of improving the world.

Read more on the expanding moral circle

Peter Singer discussing the idea of the expanding moral circle

Singer's book: The Expanding Circle

Examples

I’ve divided examples of this heuristic in action into two categories. The first is the intellectual challenge of figuring out which things we ought to care about. The second is the personal challenge of widening your circle of concern for others.

Expanding the intellectual moral circle

One way to find a new Cause X is to expand the set of things that we recognize as mattering morally. Below is a list of examples of this heuristic in action. Inclusion here does not necessarily imply an endorsement of the idea.

Wild-Animal Suffering

This paper takes the common idea that we ought to have some concern for nonhuman animals directly affected by humans (e.g. factory farmed animals or pets) and extends it to include suffering in wild animals. Due to the enormous number of wild animals and the suffering they undergo, the paper argues that “[o]ur [top] priority should be to ensure that future human intelligence is used to prevent wild-animal suffering, rather than to multiply it.”

The Importance of Wild-Animal Suffering

Reading time: ~30m [1]

Insect Suffering

If you agree that wild animals fall inside our moral circle, then one area of particular concern is the suffering of insects. Given that there are an estimated 1 billion insects for every human alive, consideration of insect suffering may matter a great deal if insects matter morally.

The Importance of Insect Suffering

Reading time: ~12m

The ethical importance of digital minds

Whatever it is that justifies including humans and animals in our scope of moral concern may exist in digital minds as well. As the sophistication and number of digital minds increase, our concern for how digital minds are treated may need to increase proportionally.

Do Artificial Reinforcement-Learning Agents Matter Morally?

Reading time: ~1h

Which Computations Do I Care About?

Reading time: ~1h

Expanding the personal moral circle

In addition to expanding the intellectual moral circle, we can expand our personal moral circle by increasing the amount of empathy, compassion, and other pro-social behaviors that we exhibit towards others. An example of this is below, although more research into this area is needed.

Using meditation to increase pro-social behaviors

Meditation, especially mindfulness, loving-kindness, and compassion meditation, appears to have positive effects on a large number of pro-social behaviors. Emerging research suggests positive effects on behavior in prosocial games, self-compassion and other-focused concern, positive emotions, and life satisfaction, as well as decreases in depressive symptoms and in implicit intergroup bias.

Loving Kindness and Compassion Meditation: Potential for Psychological Interventions

Reading time: ~22m

Heuristic 2: Consequences of technological progress

A second heuristic for finding a Cause X is to look for forthcoming technological advances that might have large implications for the future of sentient life. Work that focuses on the safety of advanced artificial intelligence is one example of how this approach has been successfully applied to find a Cause X.

This approach does not necessarily need to focus on the downsides of advanced technology. Instead, it could focus on hastening the development of particularly important or beneficial technologies (e.g. cellular agriculture) or on helping to shape the development trajectory of a beneficial technology to maximize the positive results and minimize the negative results (e.g. atomically precise manufacturing).

More on the consequences of technological progress

An introduction to transhumanism

Nick Bostrom's Letter from Utopia

Reading time: ~10m

Open Philanthropy Project’s Global Catastrophic Risks focus area

Basic intro reading time: ~2m

Examples

I’ve included some examples of this heuristic in action below. Inclusion here does not necessarily imply an endorsement of the idea.

Embryo selection

“Human capital is an important determinant of individual and aggregate economic outcomes, and a major input to scientific progress.... In this article, we analyze the feasibility, timescale, and possible societal impacts of embryo selection for cognitive enhancement. We find that embryo selection, on its own, may have significant (but likely not drastic) impacts over the next 50 years, though large effects could accumulate over multiple generations. However, there is a complementary technology – stem cell-derived gametes – which has been making rapid progress and which could amplify the impact of embryo selection, enabling very large changes if successfully applied to humans.” [2]

Embryo Selection for Cognitive Enhancement: Curiosity or Game-changer?

Reading time: ~16m

Atomically Precise Manufacturing (APM)

“Atomically precise manufacturing is a proposed technology for assembling macroscopic objects defined by data files by using very small parts to build the objects with atomic precision using earth-abundant materials.... If created, atomically precise manufacturing would likely radically lower costs and expand capabilities in computing, materials, medicine, and other areas. However, it would likely also make it substantially easier to develop new weapons and quickly and inexpensively produce them at scale with an extremely small manufacturing base.” [3]

Risks from Atomically Precise Manufacturing

Reading time: ~15m

Molecular engineering: An approach to the development of general capabilities for molecular manipulation

Reading time: ~14m

Animal Product Alternatives

“More than eight billion land animals are raised for human consumption each year in factory farms in the U.S. alone. These animals are typically raised under conditions that are painful, stressful, and unsanitary. Successfully developing animal-free foods that are taste- and cost-competitive with animal-based foods might prevent much of this suffering.” [4]

Scenarios for Cellular Agriculture

Reading time: ~10m

Animal Product Alternatives

Reading time: ~25m

Eliminating the biological substrates of suffering

“Two hundred years ago, powerful synthetic pain-killers and surgical anesthetics were unknown. The notion that physical pain could be banished from most people's lives would have seemed absurd. Today most of us in the technically advanced nations take its routine absence for granted. The prospect that what we describe as psychological pain, too, could ever be banished is equally counterintuitive. The feasibility of its abolition turns its deliberate retention into an issue of social policy and ethical choice.” [5]

The Hedonistic Imperative

Reading time: ~3h

Heuristic 3: Crucial Considerations

A final heuristic is to look for ideas or arguments that might necessitate a significant change in our priorities. Nick Bostrom calls these ideas “crucial considerations” and explains the concept as follows:

“A thread that runs through my work is a concern with "crucial considerations." A crucial consideration is an idea or argument that might plausibly reveal the need for not just some minor course adjustment in our practical endeavours but a major change of direction or priority.

If we have overlooked even just one such consideration, then all our best efforts might be for naught—or less. When headed the wrong way, the last thing needed is progress. It is therefore important to pursue such lines of inquiry as might disclose an unnoticed crucial consideration.” [6]

A good example of the use of this heuristic is the argument for focusing altruistic efforts on improving the far future. The key insights in the argument are that 1) the total value in the future may vastly exceed the value today and 2) we may be able to affect the far future. The core argument is relatively simple but has enormous implications for what we ought to do. Finding similar arguments is a promising method to discover Cause X.

Examples

I’ve included some examples of this heuristic in action below. Inclusion here does not necessarily imply an endorsement of the idea.

The simulation argument

“This paper argues that at least one of the following propositions is true: (1) the human species is very likely to go extinct before reaching a “posthuman” stage; (2) any posthuman civilization is extremely unlikely to run a significant number of simulations of their evolutionary history (or variations thereof); (3) we are almost certainly living in a computer simulation. It follows that the belief that there is a significant chance that we will one day become posthumans who run ancestor‐simulations is false, unless we are currently living in a simulation. A number of other consequences of this result are also discussed.” [7]

Are you living in a computer simulation?

Reading time: ~20m

Infinite ethics

“Cosmology shows that we might well be living in an infinite universe that contains infinitely many happy and sad people. Given some assumptions, aggregative ethics implies that such a world contains an infinite amount of positive value and an infinite amount of negative value. But you can presumably do only a finite amount of good or bad. Since an infinite cardinal quantity is unchanged by the addition or subtraction of a finite quantity, it looks as though you can't change the value of the world. Aggregative consequentialism (and many other important ethical theories) are threatened by total paralysis.” [8]

Infinite ethics

Reading time: ~1h15m

Civilizational stability

“If a global catastrophe occurs, I believe there is some (highly uncertain) probability that civilization would not fully recover (though I would also guess that recovery is significantly more likely than not). This seems possible to me for the general and non-specific reason that the mechanisms of civilizational progress are not understood and there is essentially no historical precedent for events severe enough to kill a substantial fraction of the world’s population. I also think that there are more specific reasons to believe that an extreme catastrophe could degrade the culture and institutions necessary for scientific and social progress, and/or upset a relatively favorable geopolitical situation. This could result in increased and extended exposure to other global catastrophic risks, an advanced civilization with a flawed realization of human values, failure to realize other “global upside possibilities,” and/or other issues.” [9]

The Long-Term Significance of Reducing Global Catastrophic Risks

Reading time: ~18m

Conclusion

Finding Cause X is one of the most valuable but challenging things the EA community could accomplish. The challenge comes from two sources. The first is the enormous technical challenge of finding an extremely important, plausible, and unexplored cause. The second is the emotional challenge of engaging with plausible Cause X candidates. This requires careful calibration between being appropriately skeptical about counterintuitive ideas on the one hand and being sufficiently open-minded to spot truly important ideas on the other.

Given this challenge, one way the EA community can make progress on Cause X is to create the kind of intellectual community that can engage sensibly with these ideas. Our results so far are promising, but there is much more to be done.

How to take action

If you’re interested in helping to discover Cause X, there are a few concrete actions you can take:

Career

If you’re likely to be exceptionally good at research, you could tailor your career to producing valuable and groundbreaking research. Some useful links:

- 80,000 Hours’ career profile on Valuable Academic Research

- Interview with Nick Bostrom on how to make a difference in research

- How to do high impact research

If you’re not likely to be a high-impact researcher yourself, some options are:

- High impact research management

- Working at a Think Tank

- Funding high impact research either as a foundation grantmaker, or by funding excellent research organizations like MIRI, FHI or the Foundational Research Institute.

Community

Engaging with the EA community can also help expose you to interesting ideas and help you find collaborators to expand those ideas further. Some useful links:

- The Effective Altruism Forum

- Find or start a local EA group

- If you’re mathematically inclined, you can find or start a MIRIx workshop

- Attend EA Global, or a local EAGx conference

Get Involved in Effective Altruism

Footnotes

1. Reading time calculations roughly follow Medium’s reading time calculation: the total number of words in the article divided by the average adult reading speed (275 words per minute). I ignored images to make calculating this easier.

2. Shulman, C. & Bostrom, N. (2014). ‘Embryo Selection for Cognitive Enhancement: Curiosity or Game-changer?’, Global Policy, Vol. 5, No. 1, pp. 85-92.

3. Beckstead, N. (2015). “Risks from Atomically Precise Manufacturing.” Open Philanthropy Project. Retrieved October 30, 2016, from http://www.openphilanthropy.org/research/cause-reports/atomically-precise-manufacturing

4. Beckstead, N. (2015). “Animal Product Alternatives.” Open Philanthropy Project. Retrieved October 30, 2016, from http://www.openphilanthropy.org/research/cause-reports/animal-product-alternatives

5. Pearce, D. (1995). The Hedonistic Imperative. Retrieved October 30, 2016, from http://happymutations.com/ebooks/david-pearce-the-hedonistic-imperative.pdf

6. Bostrom, N. Retrieved from http://www.nickbostrom.com/

7. Bostrom, N. (2003). ‘Are You Living in a Computer Simulation?’, Philosophical Quarterly, Vol. 53, No. 211, pp. 243-255.

8. Bostrom, N. (2011). ‘Infinite Ethics’, Analysis and Metaphysics, Vol. 10, pp. 9-59. Summary retrieved October 30, 2016, from http://www.nickbostrom.com/

9. Beckstead, N. (2015). “The long-term significance of reducing global catastrophic risks.” The GiveWell Blog. Retrieved November 1, 2016, from http://blog.givewell.org/2015/08/13/the-long-term-significance-of-reducing-global-catastrophic-risks/
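The reading-time estimates used throughout this article (see the first footnote) can be reproduced with a few lines of code. The sketch below is only an illustration of that rule of thumb: the function names are hypothetical, counting words by splitting on whitespace is an assumption, and so is rounding up to the next whole minute, since the footnote says only that the figures "roughly" follow the words-divided-by-275 calculation.

```python
import math

# Average adult reading speed stated in the footnote (words per minute).
WORDS_PER_MINUTE = 275


def reading_time_minutes(text: str, wpm: int = WORDS_PER_MINUTE) -> int:
    """Estimate reading time in whole minutes, ignoring images."""
    # Assumption: a "word" is any whitespace-separated token.
    word_count = len(text.split())
    # Assumption: round up, with a floor of one minute.
    return max(1, math.ceil(word_count / wpm))


def format_reading_time(minutes: int) -> str:
    """Format minutes in this article's style, e.g. ~12m, ~1h, ~1h15m."""
    hours, mins = divmod(minutes, 60)
    if hours == 0:
        return f"~{mins}m"
    if mins == 0:
        return f"~{hours}h"
    return f"~{hours}h{mins}m"


if __name__ == "__main__":
    # A stand-in "article" of 3,300 words: 3300 / 275 = 12 minutes.
    sample_article = "word " * 3300
    print(format_reading_time(reading_time_minutes(sample_article)))
```

Under these assumptions, a 3,300-word piece comes out at about twelve minutes, and anything over an hour is reported in hours and minutes.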